LLM-Driven OS

What is altumatimOS?

AltumatimOS is a next-generation operating system powered by Large Language Models (LLMs). Unlike traditional operating systems that focus on hardware and application management, AltumatimOS orchestrates intelligent behavior—managing LLM interactions, APIs, user interfaces, tools, and memory with semantic understanding and adaptive logic. It provides foundational services such as memory management, task orchestration, prompt routing, tool invocation, and access control, creating a secure, intelligent interface between language-based reasoning and the digital environment. As the central hub for agentic decision-making, AltumatimOS empowers developers to build advanced AI applications, automations, and workflows—all grounded in natural language understanding and generation.

Hybrid Graph Neural Networks

Cognitive Agent Architecture

At the core of altumatimOS is a hybrid reasoning engine that combines the semantic capabilities of Large Language Models (LLMs) with the structural intelligence of Graph Neural Networks (GNNs). The LLM interprets user intent and contextual signals, shaping a dynamic knowledge graph that the GNN reasons over to capture relationships, dependencies, and system state. As data flows through the OS, the GNN continuously refines its understanding, enabling intelligent orchestration, adaptive task execution, and context-aware decision-making. This integration gives altumatimOS a unified, evolving model of the system—allowing it to understand, adapt, and optimize operations with a level of intelligence and autonomy far beyond traditional operating systems.

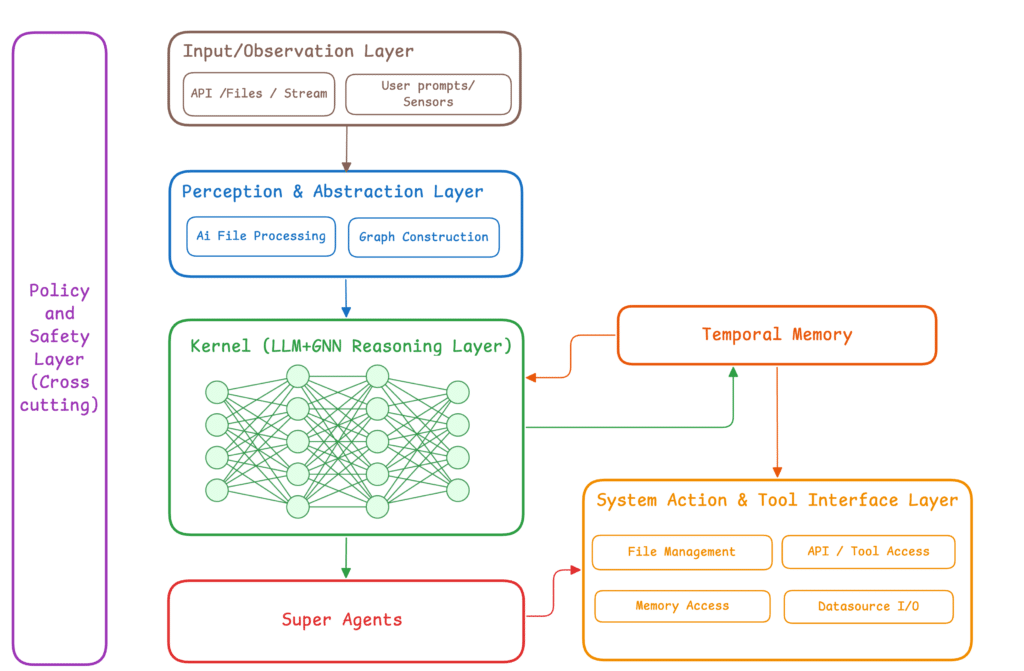

Architectural Revolution

Technical Architecture Overview

altumatimOS is an intelligent, modular operating system architected for adaptive decision-making, task orchestration, and dynamic reasoning. At its core lies a hybrid processing engine that combines the contextual strengths of Large Language Models (LLMs) with the structural precision of Graph Neural Networks (GNNs). The architecture is layered to enable fine-grained perception, semantic reasoning, agentic execution, and real-time interfacing with systems and tools, while enforcing cross-cutting safety and control policies throughout. The following sections break down the system’s key architectural components:

Input / Observation Layer

The system’s interaction with the external world begins with the Input/Observation Layer, which ingests diverse, asynchronous data sources including APIs, file systems, sensor streams, and user prompts. This layer serves as the sensory substrate of altumatimOS, capturing both structured and unstructured inputs in real time. It is designed to be modular, allowing plug-and-play integration of new I/O channels without disrupting downstream components. Events arriving at this layer are passed to the perception subsystem for contextual interpretation.
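
The source does not describe altumatimOS’s actual ingestion interfaces, so the following is only a minimal Python sketch of the plug-and-play idea, assuming each channel can be modeled as a zero-argument poll function. The names `Event`, `ObservationLayer`, `register`, and `collect` are hypothetical. Polling is synchronous here for brevity; a real implementation of asynchronous sources would more likely use an event loop or message queue.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List


@dataclass
class Event:
    """Hypothetical common envelope for an observation from any channel."""
    source: str      # name of the channel that produced the item
    payload: object  # raw structured or unstructured content
    timestamp: float = field(default_factory=time.time)


class ObservationLayer:
    """Hypothetical registry of pluggable input channels."""

    def __init__(self) -> None:
        # channel name -> zero-argument poll function returning new raw items
        self._channels: Dict[str, Callable[[], Iterable[object]]] = {}

    def register(self, name: str, poll: Callable[[], Iterable[object]]) -> None:
        # Adding a channel touches only this registry, not downstream layers.
        self._channels[name] = poll

    def unregister(self, name: str) -> None:
        self._channels.pop(name, None)

    def collect(self) -> List[Event]:
        """Poll every channel and wrap each raw item in an Event for perception."""
        events: List[Event] = []
        for name, poll in self._channels.items():
            for item in poll():
                events.append(Event(source=name, payload=item))
        return events


if __name__ == "__main__":
    layer = ObservationLayer()
    layer.register("user_prompt", lambda: ["Summarize the Q3 contracts"])
    layer.register("file_system", lambda: [{"path": "/inbox/q3.pdf", "op": "created"}])
    for event in layer.collect():
        print(event.source, event.payload)
```

Because every channel is normalized into the same `Event` envelope, the perception layer only needs to understand one shape of input, regardless of how many channels are plugged in.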

Perception & Abstraction Layer

The Perception & Abstraction Layer processes incoming data through a set of LLM-driven semantic transformation modules. It performs multi-stage abstraction, where raw signals are parsed, interpreted, and converted into high-dimensional representations. These representations are then organized into structured semantic graphs that encode relationships, entities, tasks, and operational contexts. The result is a unified intermediate representation that captures both explicit data and latent meaning — feeding directly into the kernel layer. By combining AI-powered file processing and dynamic graph construction, this layer forms the cognitive grounding for downstream reasoning.
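
One way to picture the step from interpreted signals to a structured semantic graph is the minimal sketch below. It assumes, hypothetically, that the LLM has been prompted to emit one `subject | relation | object` line per fact. The function `extract_triples` stands in for that LLM call and simply parses the text. `SemanticGraph` and `abstract` are illustrative names, not part of any documented altumatimOS API, and the high-dimensional representations the paragraph mentions are omitted.

```python
from collections import defaultdict
from typing import DefaultDict, List, Set, Tuple

Triple = Tuple[str, str, str]


def extract_triples(llm_output: str) -> List[Triple]:
    """Stand-in for an LLM extraction step (hypothetical).

    Assumes the model emitted one 'subject | relation | object' line per fact;
    malformed lines are skipped rather than guessed at.
    """
    triples: List[Triple] = []
    for line in llm_output.splitlines():
        parts = [p.strip() for p in line.split("|")]
        if len(parts) == 3 and all(parts):
            triples.append((parts[0], parts[1], parts[2]))
    return triples


class SemanticGraph:
    """Directed, labeled graph of entities, tasks, and their relations."""

    def __init__(self) -> None:
        self.nodes: Set[str] = set()
        self.edges: DefaultDict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add_triple(self, subject: str, relation: str, obj: str) -> None:
        self.nodes.update((subject, obj))
        self.edges[subject].append((relation, obj))

    def neighbors(self, node: str, relation: str = "") -> List[str]:
        """Targets of outgoing edges, optionally filtered by relation label."""
        return [o for r, o in self.edges.get(node, []) if not relation or r == relation]


def abstract(llm_output: str, graph: SemanticGraph) -> SemanticGraph:
    """Merge the facts extracted from one observation into the shared graph."""
    for s, r, o in extract_triples(llm_output):
        graph.add_triple(s, r, o)
    return graph
```

Keeping the graph as labeled triples means later layers can follow specific relations (for example only `depends_on` edges) rather than treating all connections alike.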

Kernel (LLM + GNN Reasoning Layer)

At the heart of the OS is the Kernel, a hybrid LLM + GNN reasoning engine. The kernel receives the abstracted semantic graphs and performs deep relational reasoning, contextual propagation, and task routing. While the LLM contributes interpretive and linguistic understanding, the GNN specializes in capturing and traversing the relational structure of the knowledge graph, enabling multi-hop inference and complex dependency resolution. The kernel’s design allows it to evolve its internal state in response to new observations, memory retrievals, and feedback from downstream layers. It acts as the central intelligence core and is itself policy-free: policy enforcement is left to the cross-cutting Policy and Safety Layer. The kernel orchestrates all decision-making with awareness of both global system state and local task constraints.
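
Multi-hop inference in a GNN comes from message passing: in each round, every node updates its state from its neighbors’ states, so k rounds let information travel k hops. The sketch below is a deliberately minimal, parameter-free version (mean aggregation with a fixed self-weight) meant only to show that mechanism; a trained GNN would use learned weight matrices and nonlinearities, and the source does not say which GNN variant the altumatimOS kernel uses. `message_pass` and its parameters are illustrative.

```python
from typing import Dict, List

Vector = List[float]


def message_pass(features: Dict[str, Vector],
                 adjacency: Dict[str, List[str]],
                 rounds: int,
                 self_weight: float = 0.5) -> Dict[str, Vector]:
    """Mean-aggregation message passing without learned parameters."""
    h = {v: list(x) for v, x in features.items()}
    for _ in range(rounds):
        updated: Dict[str, Vector] = {}
        for v, hv in h.items():
            nbrs = adjacency.get(v, [])
            if not nbrs:
                updated[v] = list(hv)  # isolated node keeps its state
                continue
            # Average neighbor vectors component-wise (the "message").
            msg = [sum(h[u][i] for u in nbrs) / len(nbrs) for i in range(len(hv))]
            # Mix the node's own state with the aggregated message.
            updated[v] = [self_weight * a + (1 - self_weight) * b for a, b in zip(hv, msg)]
        h = updated  # synchronous update: every node read the previous round
    return h


if __name__ == "__main__":
    adjacency = {"A": ["B"], "B": ["A", "C"], "C": ["B"]}
    features = {"A": [0.0], "B": [0.0], "C": [1.0]}
    print(message_pass(features, adjacency, rounds=1)["A"])
    print(message_pass(features, adjacency, rounds=2)["A"])
```

On the chain A-B-C, where only C carries a signal, node A is still `[0.0]` after one round and becomes `[0.125]` after two: the two-hop dependency only becomes visible once two rounds have run.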

Temporal Memory

Adjacent to the kernel is the Temporal Memory system, a persistent, graph-based memory architecture optimized for long-term context retention, causality tracking, and state evolution. Unlike flat vector stores, the temporal memory maintains a structured timeline of events, actions, decisions, and system states. This allows the kernel and agents to query not only the current system snapshot, but also its developmental history and causal trajectories. The memory is versioned, time-indexed, and queryable by semantic and structural patterns, supporting everything from episodic recall to cumulative learning.
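
To make "versioned, time-indexed, and causally linked" concrete, here is a minimal sketch under stated assumptions: events carry integer logical timestamps, are recorded in time order, and may point to an earlier event that caused them. `MemoryEvent`, `TemporalMemory`, `value_at`, and `causal_chain` are hypothetical names; the actual storage model of altumatimOS is not described in the source, and semantic (embedding-based) queries are left out.

```python
import bisect
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class MemoryEvent:
    t: int                           # logical timestamp
    kind: str                        # "observation", "decision", "action", ...
    key: str                         # entity whose state this event sets
    value: object
    caused_by: Optional[int] = None  # index of the triggering event, if any


class TemporalMemory:
    """Append-only event timeline with per-key version history (hypothetical)."""

    def __init__(self) -> None:
        self.events: List[MemoryEvent] = []
        self._versions: Dict[str, List[Tuple[int, int]]] = {}  # key -> [(t, event index)]

    def record(self, t: int, kind: str, key: str, value: object,
               caused_by: Optional[int] = None) -> int:
        if self.events and t < self.events[-1].t:
            raise ValueError("events must be recorded in time order")
        idx = len(self.events)
        if caused_by is not None and not 0 <= caused_by < idx:
            raise ValueError("a cause must be an earlier event")  # keeps chains acyclic
        self.events.append(MemoryEvent(t, kind, key, value, caused_by))
        self._versions.setdefault(key, []).append((t, idx))
        return idx

    def value_at(self, key: str, t: int) -> object:
        """State of `key` as of time t: the latest version with timestamp <= t."""
        versions = self._versions.get(key, [])
        pos = bisect.bisect_right([vt for vt, _ in versions], t)
        return self.events[versions[pos - 1][1]].value if pos else None

    def causal_chain(self, idx: int) -> List[MemoryEvent]:
        """Follow caused_by links from an event back to its root cause."""
        chain: List[MemoryEvent] = []
        cur: Optional[int] = idx
        while cur is not None:
            event = self.events[cur]
            chain.append(event)
            cur = event.caused_by
        return chain
```

Because nothing is overwritten, "what was true at time t" and "why did this decision happen" are both answerable from the same log, which is the property a flat vector store lacks.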

Super Agent

The Super Agent serves as the executive layer responsible for high-level goal decomposition, task delegation, and multi-agent orchestration. It interfaces directly with the reasoning kernel to receive strategic directives and context, and then manages a set of modular, task-specialized agents. These agents are instantiated dynamically and can operate in parallel or in coordination, depending on the complexity of the problem space. The Super Agent ensures coherent execution across agents, resolving conflicts, maintaining system alignment with top-level objectives, and reporting execution feedback upstream. It is the core runtime that brings cognition to life through scalable, modular action.
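
The decompose, delegate, and report-upstream loop can be sketched as follows. This is a hypothetical illustration, not the altumatimOS runtime: it assumes goal decomposition is available as a function returning `(specialty, subtask)` pairs (standing in for kernel or LLM planning), that each specialized agent is a plain callable, and it uses Python’s standard `concurrent.futures.ThreadPoolExecutor` for parallel execution. Conflict resolution between agents is not modeled.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List, Tuple

Agent = Callable[[str], Any]                      # subtask description -> result
Planner = Callable[[str], List[Tuple[str, str]]]  # goal -> [(specialty, subtask)]


class SuperAgent:
    """Hypothetical executive loop: decompose, delegate in parallel, report."""

    def __init__(self, agents: Dict[str, Agent], plan: Planner) -> None:
        self.agents = agents  # specialty -> task-specialized agent
        self.plan = plan      # stand-in for kernel/LLM-driven goal decomposition

    def run(self, goal: str) -> Dict[str, Any]:
        report: Dict[str, Any] = {"goal": goal, "results": [], "failures": []}
        with ThreadPoolExecutor() as pool:
            pending = []
            for specialty, subtask in self.plan(goal):
                agent = self.agents.get(specialty)
                if agent is None:
                    report["failures"].append((specialty, subtask, "no agent for specialty"))
                    continue
                pending.append((specialty, subtask, pool.submit(agent, subtask)))
            # Collect in plan order so the report is deterministic.
            for specialty, subtask, future in pending:
                try:
                    report["results"].append((specialty, future.result()))
                except Exception as exc:  # surface failures upstream instead of crashing
                    report["failures"].append((specialty, subtask, repr(exc)))
        return report
```

Returning failures as data rather than raising lets the caller (here, the kernel) decide whether to re-plan, retry with another agent, or escalate.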

System Action & Tool Interface Layer

This layer provides the final connection between internal reasoning and the external operational environment. The System Action & Tool Interface Layer exposes standardized APIs for file manipulation, data access, external tool invocation, and real-world interaction. It abstracts underlying system complexity, enabling agents and the kernel to interact with heterogeneous systems through a consistent interface. The layer includes capabilities for direct memory access, datasource I/O, API execution, and sandboxed tool interaction. It also captures execution results, errors, and system feedback — which are routed back to the kernel and stored in temporal memory for future reasoning.
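
A consistent interface over heterogeneous tools, with every result or error captured and routed onward, might look like the minimal sketch below. `ToolResult`, `ToolInterface`, `invoke`, and the `on_feedback` hook are hypothetical names; the hook stands in for delivery to the kernel and temporal memory. Note that this only catches exceptions: real sandboxing (separate processes, containers, resource limits) is outside its scope.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional


@dataclass
class ToolResult:
    tool: str
    ok: bool
    output: Any = None
    error: Optional[str] = None


class ToolInterface:
    """Hypothetical uniform front end over heterogeneous tools."""

    def __init__(self, on_feedback: Callable[[ToolResult], None]) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}
        # Every outcome is forwarded here, e.g. to the kernel or temporal memory.
        self._on_feedback = on_feedback

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        self._tools[name] = fn

    def invoke(self, name: str, **kwargs: Any) -> ToolResult:
        fn = self._tools.get(name)
        if fn is None:
            result = ToolResult(name, ok=False, error=f"unknown tool: {name}")
        else:
            try:
                result = ToolResult(name, ok=True, output=fn(**kwargs))
            except Exception as exc:  # errors become data, not crashes
                result = ToolResult(name, ok=False, error=f"{type(exc).__name__}: {exc}")
        self._on_feedback(result)
        return result


if __name__ == "__main__":
    feedback_log = []
    tools = ToolInterface(feedback_log.append)
    tools.register("read_file", lambda path: {"/a.txt": "hello"}[path])
    print(tools.invoke("read_file", path="/a.txt"))
    print(tools.invoke("read_file", path="/missing"))
```

Callers always receive the same `ToolResult` shape whether the tool succeeded, failed, or does not exist, which is what lets agents treat very different systems uniformly.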

Policy and Safety Layer (Cross-Cutting)

Enforcing security, ethical alignment, and operational integrity is the responsibility of the Policy and Safety Layer. As a cross-cutting concern, it applies globally across all layers of the system, evaluating actions, decisions, and data access in real time. This layer implements constraints, guardrails, and sandboxing rules that regulate agent behaviors, system calls, and external integrations. It ensures the OS adheres to predefined operational boundaries and is designed to support both static policies (e.g., access control, rate limits) and dynamic ones (e.g., ethical reasoning, intervention triggers). It functions as an oversight layer: it does not interrupt cognition, but ensures that cognition remains aligned with user intent and platform safeguards.
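
The combination of static and dynamic policies can be illustrated with a minimal pre-execution check. In this hypothetical sketch, static policy is a per-agent allowlist of operations plus a sliding-window rate limit, and dynamic policy is a list of predicate functions that return a denial reason. `Action`, `PolicyEngine`, and the operation names are assumptions for illustration; a model-based ethical check, as the paragraph describes, would presumably plug in as one of those predicates.

```python
import time
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Action:
    agent: str
    operation: str  # e.g. "fs.read", "net.http" (hypothetical operation names)
    target: str


DynamicRule = Callable[[Action], Optional[str]]  # returns a denial reason, or None


class PolicyEngine:
    """Hypothetical pre-execution check: static ACL, rate limit, dynamic rules."""

    def __init__(self, allow: Dict[str, Set[str]], max_calls: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.allow = allow          # static: agent -> permitted operations
        self.max_calls = max_calls  # static: calls allowed per agent per window
        self.window_s = window_s
        self.clock = clock
        self.dynamic_rules: List[DynamicRule] = []
        self._calls: Dict[str, Deque[float]] = defaultdict(deque)

    def check(self, action: Action) -> Tuple[bool, str]:
        if action.operation not in self.allow.get(action.agent, set()):
            return False, "operation not permitted for agent"
        now = self.clock()
        calls = self._calls[action.agent]
        while calls and now - calls[0] >= self.window_s:  # drop calls outside the window
            calls.popleft()
        if len(calls) >= self.max_calls:
            return False, "rate limit exceeded"
        for rule in self.dynamic_rules:
            reason = rule(action)
            if reason:
                return False, reason
        calls.append(now)  # only allowed actions count toward the limit
        return True, "allowed"
```

The injectable `clock` keeps the rate limit testable without sleeping, and denied actions are not counted, so a blocked agent is not additionally penalized by the rate limiter.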

Advanced Features Table

AltumatimOS supports a range of advanced features that make it capable of autonomous behavior, continuous learning, and secure multi-agent collaboration. Below are some of its most critical differentiators:

| Feature | Description | Real-World Example |
|---|---|---|
| Multi-Super Agent Collaboration | Super Agents can communicate, coordinate, and share knowledge or strategies when permitted by policy. | A Legal Super Agent shares compliance constraints with a Finance Super Agent to ensure regulation-aware decisions. |
| Reinforcement Learning from AI Feedback (RLAIF) | Super Agents analyze outcomes and feed success/failure signals back into the cognition layer for learning and policy updates. | A DevOps agent learns to delay non-critical updates during peak traffic after receiving negative RL feedback for previous disruptions. |
| Dynamic Task Allocation | Super Agents and Multi Agents can reassign tasks in real time based on availability, specialization, workload, or the failure of another agent. | If one NLP agent is overloaded, another with similar capabilities takes over without human intervention. |
| Permissioned Sharing | Knowledge, experience, or learned models are shared across Super Agents only when allowed by explicit policies or security layers. | A medical Super Agent shares anonymized diagnostic patterns with a research Super Agent, respecting patient privacy laws. |
| Agent Autonomy | Low-level agents have enough context and logic to complete recurring or predefined tasks without needing higher-layer approvals. | A data-cleaning agent autonomously processes incoming CSVs and flags anomalies without involving the Super Agent. |
| Context-Aware Decision Making | Decisions at all levels (Agent → Super Agent) are guided by both real-time and historical context maintained in the memory graph. | An AI assistant offers different suggestions for the same query depending on the user’s current task and previous behavior. |
| Hierarchical Reasoning | Tasks can be decomposed into subtasks, with reasoning at multiple levels: abstract at the top and tactical at the bottom. | A research Super Agent breaks down a scientific question into literature review, data modeling, and hypothesis testing subtasks. |
| Temporal Memory and Experience Accumulation | System-wide memory allows agents to learn not only from the current context but also from historical decisions, past RL rewards, and event timelines. | A user-facing AI recalls previous conversations to maintain tone, avoid repetition, and build rapport. |
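
The Dynamic Task Allocation row can be illustrated with a small allocator. This is a hypothetical sketch, not a documented altumatimOS component: it assumes each agent declares a set of skills and a fixed task capacity, routes each task to the least-loaded healthy agent with the required skill, and reassigns an agent’s tasks when it is marked as failed. `AgentState`, `Allocator`, `assign`, and `mark_failed` are illustrative names; returning `None` signals that no suitable agent exists and the task must be escalated.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class AgentState:
    name: str
    skills: Set[str]
    capacity: int                                  # max concurrent tasks
    tasks: List[str] = field(default_factory=list)
    healthy: bool = True

    def available(self) -> bool:
        return self.healthy and len(self.tasks) < self.capacity


class Allocator:
    """Hypothetical least-loaded, skill-matched task allocator."""

    def __init__(self, agents: List[AgentState]) -> None:
        self.agents: Dict[str, AgentState] = {a.name: a for a in agents}

    def assign(self, task: str, skill: str) -> Optional[str]:
        candidates = [a for a in self.agents.values() if skill in a.skills and a.available()]
        if not candidates:
            return None  # nothing suitable: escalate to the Super Agent
        # Lowest relative load wins; ties go to the agent registered first.
        best = min(candidates, key=lambda a: len(a.tasks) / a.capacity)
        best.tasks.append(task)
        return best.name

    def mark_failed(self, name: str, skill_of: Dict[str, str]) -> Dict[str, Optional[str]]:
        """Take an agent offline and reassign its tasks; None means unassigned."""
        agent = self.agents[name]
        agent.healthy = False
        orphaned, agent.tasks = agent.tasks, []
        return {task: self.assign(task, skill_of[task]) for task in orphaned}
```

Load is measured relative to capacity so that a larger agent is not starved of work, and reassignment reuses the same `assign` logic, so failover follows exactly the same skill and load rules as initial placement.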